The ability to generate keywords from text is essential for SEO, content creation, and academic research. Identifying the terms and phrases that best represent your content can improve search engine rankings, streamline research, and guide effective content strategies. Advances in natural language processing (NLP) have made keyword extraction simpler and more accurate than ever, with tools like Eskritor standing out as a prime example.

In this guide, we’ll explore the importance of text analysis for keyword generation, walk through several techniques (including term frequency analysis, TF-IDF, and semantic analysis for generating keywords in context), highlight the best tools for generating keywords from text, and provide a step-by-step tutorial for keyword generation.

Why Generate Keywords from Text?

Keyword extraction underpins a variety of professional tasks—from improving website rankings to categorizing research documents. Below are some core reasons why this process is vital for modern content and data strategies.

1. Optimize Content for SEO

Keywords form the backbone of any effective search engine optimization strategy. By identifying the most relevant terms used by your target audience, you can optimize blog posts, landing pages, and other online content to rank higher in search results. This not only increases traffic but also enhances user engagement by aligning your content with reader intent.

2. Enhance Research Efficiency

For students, researchers, and data analysts, keyword extraction can significantly reduce the time spent sifting through large documents or academic papers. By highlighting the main ideas and terminology, you can quickly categorize findings, locate important references, and even track trends across multiple studies. Tools like Google Scholar further aid in discovering related research topics and articles.

3. Improve Content Strategy

Understanding which keywords resonate with your audience—be they long-tail keywords for niche industries or broad terms with high search volumes—helps you plan your content calendar more effectively. This ensures each piece you produce speaks directly to what your readers or customers are searching for, ultimately boosting conversions and user satisfaction.

4. Automate Manual Processes

Gone are the days when teams had to manually skim through pages and pages of text to identify recurring themes and phrases. Modern keyword extraction tools save time and reduce human error by automating the entire process, allowing you to focus on higher-level tasks like strategy, analysis, and execution.

5. Faster Results

Processing large volumes of text manually is cumbersome. An AI solution like Eskritor can analyze hundreds of pages in mere minutes, enabling you to iterate quickly and make data-driven decisions faster.

Common Techniques for Generating Keywords from Text

Different techniques cater to various analytical needs, whether you want a simple frequency count or a deeper semantic understanding. Let’s explore the most widely used methods and how they help extract meaningful keywords.

1. Term Frequency Analysis

A foundational method, term frequency (TF) analysis identifies the most commonly used words or phrases within a text. While this approach can surface obvious keywords, it doesn’t account for how unique or specific those terms are across multiple documents.
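As a rough illustration of term frequency counting, here is a minimal sketch using only Python's standard library. The function name `term_frequencies`, the regex tokenizer, and the sample sentence are assumptions made for this example, not part of any tool mentioned in this guide.

```python
import re
from collections import Counter

def term_frequencies(text, top_n=5):
    """Return the top_n most common lowercase word tokens with their counts."""
    # Deliberately simple tokenizer: runs of letters and apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens).most_common(top_n)

sample = (
    "Keyword extraction finds keywords. "
    "Good keywords improve search, and search drives traffic."
)
top_terms = term_frequencies(sample, top_n=3)
print(top_terms)
```

Note that "keyword" and "keywords" are counted separately here, and filler words such as "and" are counted too, which is why raw frequency is usually combined with preprocessing and other techniques.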

2. TF-IDF (Term Frequency–Inverse Document Frequency)

TF-IDF refines basic term frequency analysis by factoring in how rare a term is across a set of documents. Words that appear frequently in one document but rarely in others receive a higher score, making this technique excellent for pinpointing more specialized or context-specific keywords.
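The scoring idea can be sketched in a few lines of plain Python. This example assumes one common unsmoothed variant, score = (count / document length) × log(N / document frequency); libraries often use smoothed or normalized versions, so their numbers will differ. The helper names and the three sample documents are illustrative only.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Split text into lowercase alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def tf_idf(documents):
    """Return one {term: score} dict per document (unsmoothed TF-IDF)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: the number of documents each term appears in.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores

docs = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "the bird sang in the tree",
]
doc_scores = tf_idf(docs)
# Rank the first document's terms from highest to lowest score.
ranked = sorted(doc_scores[0].items(), key=lambda item: item[1], reverse=True)
print(ranked)
```

In this sample, "the" appears in every document and scores zero, while words unique to the first document, such as "sat" and "mat", score highest.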

3. Semantic Analysis

Semantic analysis identifies contextually relevant phrases by examining the relationships between words rather than just counting how often they appear. This is particularly useful if you want to capture synonyms, related terms, or thematically linked ideas rather than repetitive words that may not carry significance.
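Full semantic analysis usually relies on trained language models or word embeddings, which are beyond a short example. As a much cruder stand-in, the sketch below counts which words appear near each other (co-occurrence), which gives a first hint of related terms. The function name `cooccurrence`, the `window` parameter, and the sample text are assumptions for illustration.

```python
import re
from collections import Counter, defaultdict

def cooccurrence(text, window=2):
    """Map each word to a Counter of words found within `window` positions of it."""
    tokens = re.findall(r"[a-z]+", text.lower())
    related = defaultdict(Counter)
    for i, word in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # skip the word itself
                related[word][tokens[j]] += 1
    return related

sample = (
    "search engine ranking improves when search engine content "
    "matches search intent"
)
related = cooccurrence(sample, window=1)
print(related["search"].most_common(2))
```

Here "engine" is the strongest neighbor of "search", suggesting "search engine" as a candidate phrase. Co-occurrence alone cannot detect synonyms, so treat it as a starting point rather than a substitute for semantic models.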

4. NLP-Based Approaches

Advanced NLP techniques like Named Entity Recognition (NER) and topic modeling go beyond simple word counts. NER identifies people, places, organizations, and other specific entities, which can be crucial keywords in journalism or business analysis. Topic modeling reveals broader themes within a text, making it easier to categorize large volumes of data.

Best Tools for Generating Keywords from Text

Keyword extraction can be done using a range of specialized tools and platforms. Below is a look at some leading options, each suited for different user requirements—from SEO optimization to comprehensive data analysis.

1. Eskritor

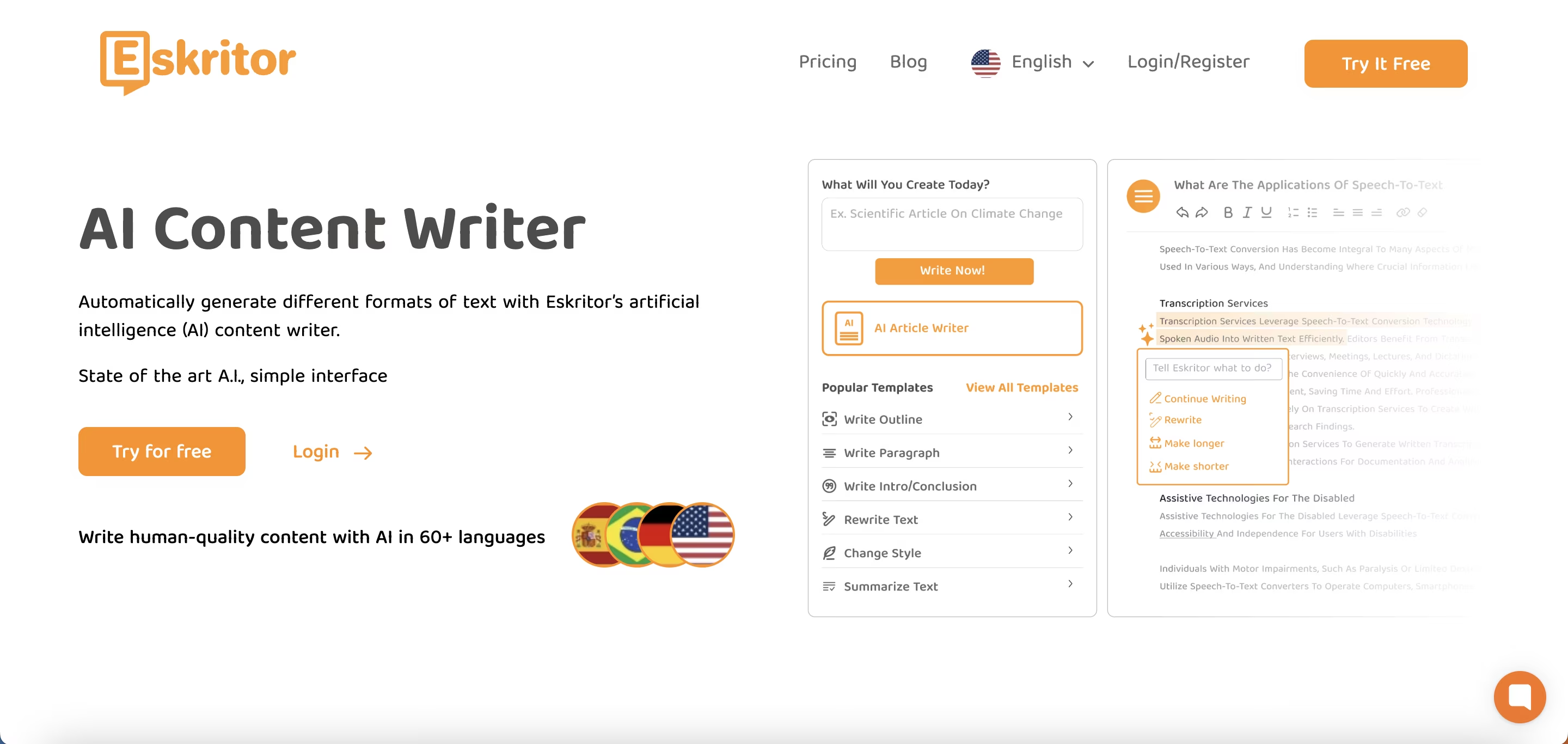

Eskritor is an advanced, AI-driven writing platform designed to simplify and enhance the process of keyword extraction for users across various fields—whether you’re optimizing for SEO, developing marketing campaigns, or conducting academic research. By harnessing powerful natural language processing (NLP) algorithms, Eskritor automatically identifies crucial terms and phrases in your text, highlighting both frequency-based keywords and context-driven insights.

Key Features

- AI-powered writing tool tailored for SEO, marketing, and academic use

- Simple interface for analyzing text and generating actionable keywords

Why It Stands Out

- Speeds up keyword analysis with advanced NLP algorithms

- Offers customizable options for frequency filtering and semantic analysis

2. Google Keyword Planner

A staple for digital marketers, Google Keyword Planner aligns suggested keywords with search volume data. While it’s primarily for PPC campaigns, it also helps content creators refine their topics based on real user queries. The integration with Google Ads provides direct insights into how often specific terms are searched, giving you a head start in crafting content that matches user intent.

3. MonkeyLearn

Ideal for text analysis, MonkeyLearn provides keyword extraction using powerful NLP APIs. It excels at categorizing feedback, reviews, or social media chatter, making it valuable for brands focusing on sentiment and trend analysis. MonkeyLearn’s dashboard includes options for real-time processing, meaning you can set it up to continuously analyze incoming data—perfect for fast-paced marketing teams or customer support operations.

4. R and Python Libraries

For users comfortable with programming, libraries like tidytext (R) and spaCy (Python) enable in-depth, customizable keyword extraction. You can implement TF-IDF, topic modeling, and sentiment analysis for highly specialized or large-scale projects.

These libraries offer the flexibility to fine-tune parameters or integrate with machine learning frameworks, making them ideal for data scientists or researchers requiring a fully tailored text analysis pipeline.

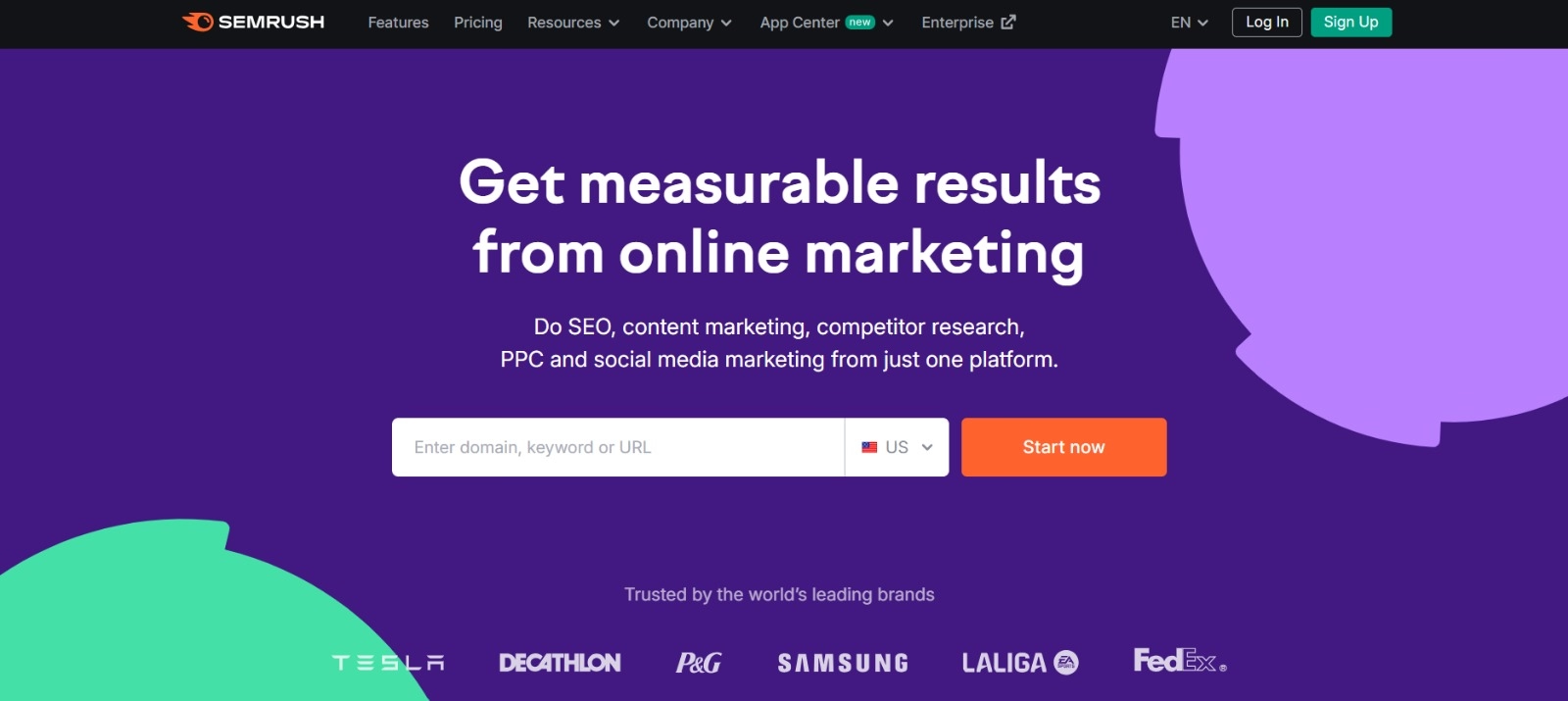

5. Semrush

A popular choice among SEO professionals, Semrush combines keyword research with competitive analysis. Its focus on search volume, difficulty scores, and competitor strategies helps refine content plans for maximum visibility.

Beyond keyword suggestions, Semrush provides domain-level insights, such as backlink profiles and traffic analytics, enabling a holistic approach to SEO and digital marketing tactics.

Step-by-Step Guide to Generating Keywords with Eskritor

When it comes to straightforward yet powerful keyword extraction, Eskritor offers an excellent balance between usability and advanced NLP features. Here’s how to make the most of its platform.

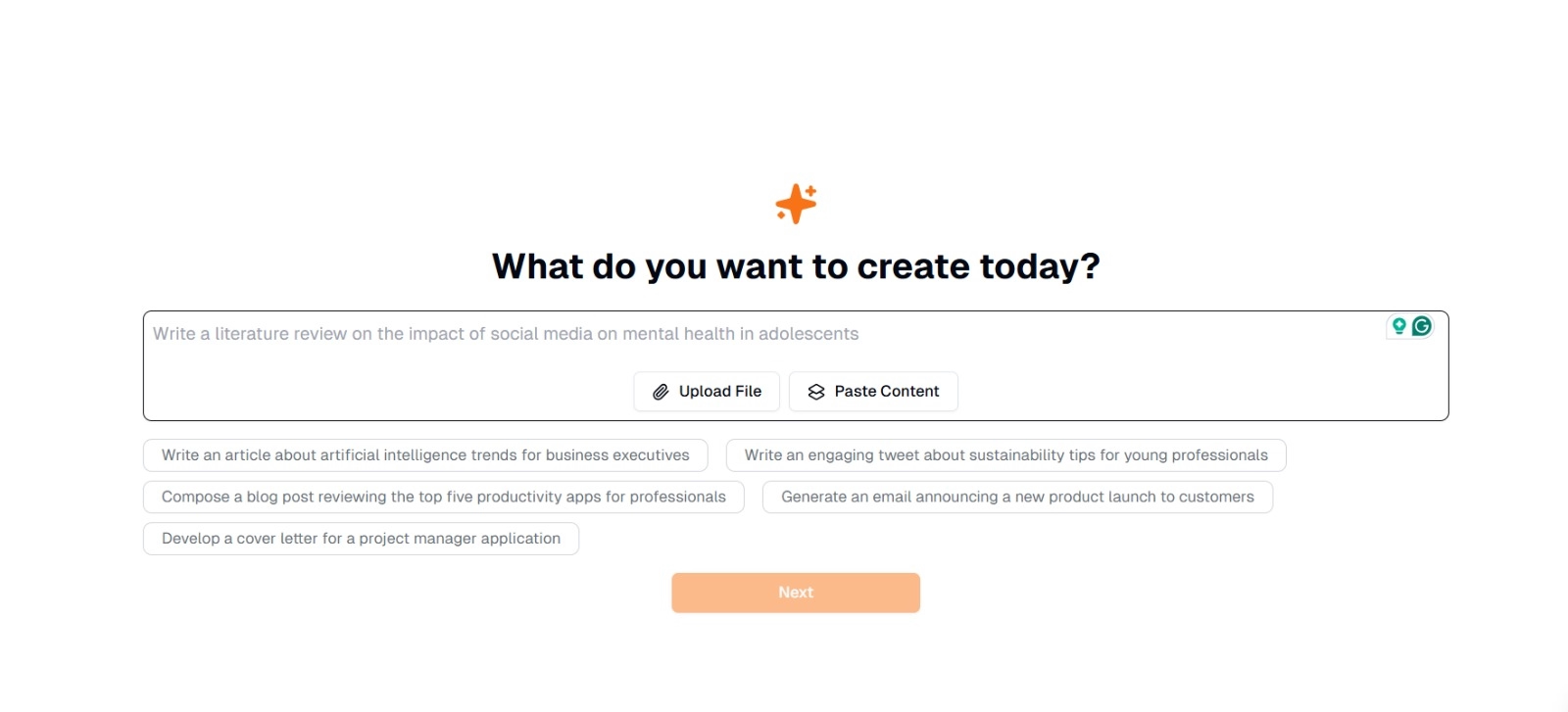

Step 1: Enter or Upload Your Text

On Eskritor’s main interface, you’ll see a prompt asking, “What do you want to create today?” Here, you have two options:

- Paste Content: Copy and paste your text directly into the given text box.

- Upload File: Choose a document (e.g., .docx, .pdf, .txt) that contains the text you want to analyze.

Tip: You can also select from suggested prompts (e.g., “Write an article about artificial intelligence trends...”) if you plan to generate or rework text before extraction.

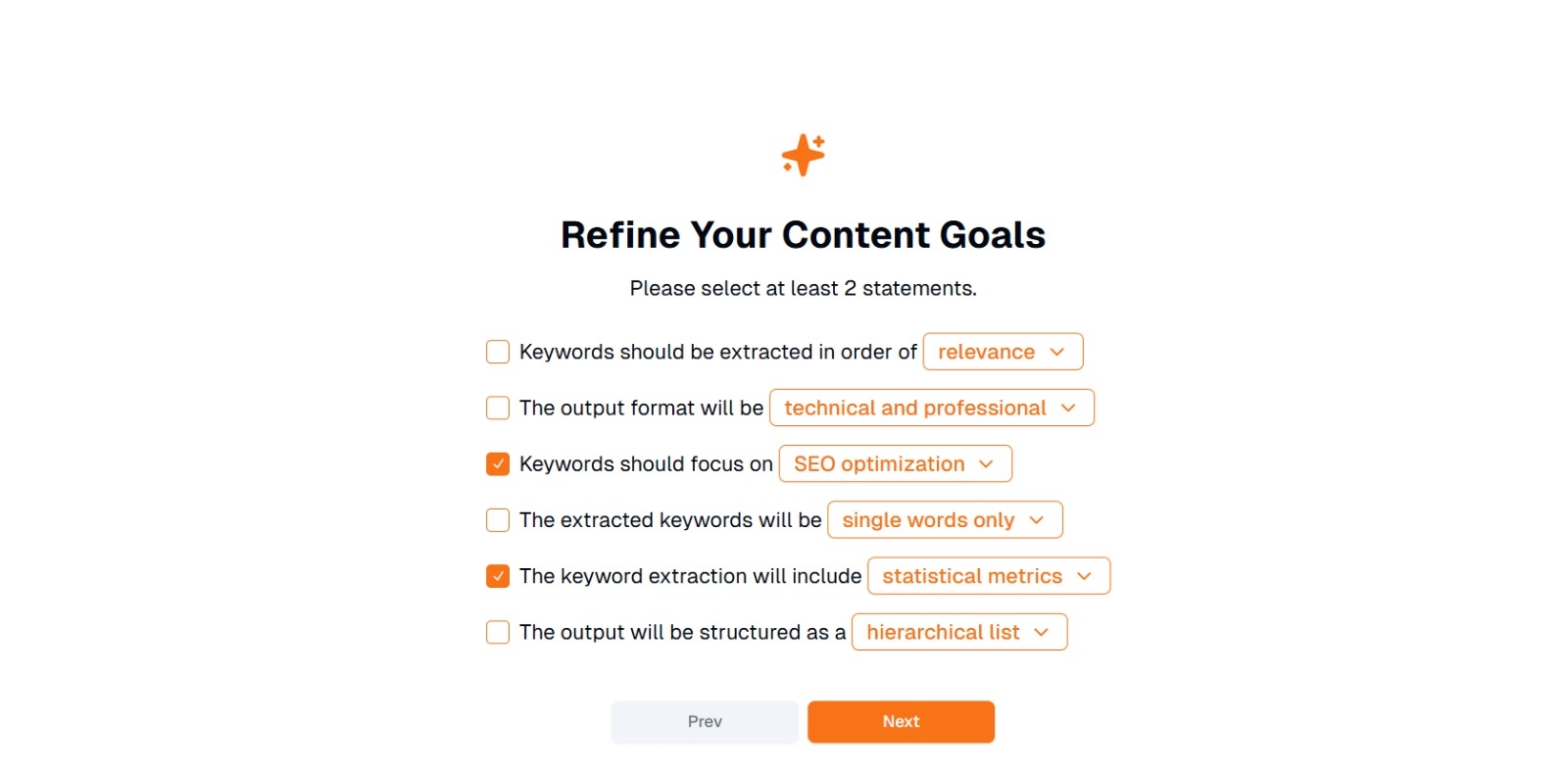

Step 2: Refine Your Content Goals

You’ll be prompted with “What do you want to do with this content?” and asked to select at least two statements. You can also type what you want to do directly into the chat. Options may include:

- Keywords should be extracted in order of: (e.g., relevance, frequency, alphabetical, etc.)

- The output format will be: (e.g., technical and professional, casual and friendly)

- Keywords should focus on: (e.g., SEO optimization, academic research, marketing angles)

- The extracted keywords will be: (e.g., single words only, multi-word phrases)

- The keyword extraction will include: (e.g., statistical metrics, semantic grouping)

- The output will be structured as: (e.g., a hierarchical list, simple bullet points)

Tip: Select the statements that best match your project’s needs. For instance, if you’re optimizing a blog post for search engines, consider checking SEO optimization, single words only, and extracted in order of relevance.

Step 3: Click “Next” to Generate Keywords

After refining your goals and making the desired selections, click Next (or a similar button) to begin the keyword extraction. Eskritor’s AI will process the text, applying NLP methods such as term frequency analysis, TF-IDF, and semantic analysis (as applicable) to identify the most relevant keywords.

Step 4: Review the Extracted Keywords

Eskritor will present a list (or hierarchy) of keywords based on the criteria you selected. The display might include:

- Keyword List: Arranged by relevance, frequency, or in a hierarchical format.

Tip: Look out for keywords that may need merging (e.g., singular vs. plural forms) or removal (e.g., overly generic terms).
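If you export the keyword list and want to merge singular and plural forms yourself, a small script can help. The sketch below is a hypothetical post-processing step, not an Eskritor feature: it assumes the keywords are available as a word-to-count mapping and only handles plurals formed by adding "s".

```python
from collections import Counter

def merge_plurals(keyword_counts):
    """Fold a plural keyword into its singular form when both are present."""
    merged = Counter()
    for word, count in keyword_counts.items():
        singular = word[:-1] if word.endswith("s") else word
        # Merge only when the singular form is itself in the list,
        # so words like "analysis" are left untouched.
        target = singular if singular in keyword_counts else word
        merged[target] += count
    return merged

counts = {"keyword": 3, "keywords": 5, "analysis": 2, "tool": 1}
merged = merge_plurals(counts)
print(merged.most_common())
```

Irregular plurals (for example "strategies" and "strategy") are not handled; a stemming or lemmatization library would be a better fit for those cases.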

Step 5: Export or Apply the Results

Finally, export the keyword list or incorporate it directly into your workflow:

- Export Options: Download as PDF, DOCX, or HTML.

- Use Cases: Integrate into SEO tools, content planning documents, research outlines, or marketing dashboards.

Tips for Effective Keyword Generation

Ensuring that your keyword extraction is both accurate and aligned with your goals requires strategy. Here are some best practices to consider.

1. Clean and Preprocess the Text

Remove stop words, special characters, and irrelevant data before analysis to avoid clutter. Tools like NLTK (Python) or certain text editors can automate this preprocessing step.
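A minimal sketch of this preprocessing step, using only the standard library, might look like the following. The stop-word set here is a tiny illustrative sample chosen for this example; real lists, such as the one shipped with NLTK, are much longer.

```python
import re

# Tiny illustrative stop-word list (an assumption for this example).
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "for", "on", "with"}

def preprocess(text):
    """Lowercase the text, strip special characters, and drop stop words."""
    # Replace anything that is not a letter, digit, or whitespace with a space.
    cleaned = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return [token for token in cleaned.split() if token not in STOP_WORDS]

tokens = preprocess("The best tools for SEO & content-planning in 2024!")
print(tokens)
```

Replacing special characters with spaces splits hyphenated terms such as "content-planning" into two tokens. If you want to keep such terms intact, adjust the regular expression to allow hyphens.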

2. Balance Frequency and Relevance

A keyword isn’t just about how many times it appears—it’s also about contextual importance. Combine frequency metrics with semantic analysis to strike the right balance.

3. Use a Mix of Techniques

Leverage multiple approaches—TF-IDF, semantic analysis, or named entity recognition—to capture a fuller range of relevant keywords and minimize blind spots.

4. Continuously Test and Refine

Keyword trends can change over time, especially in fast-moving industries. Regularly update your keywords based on new data, performance metrics, or evolving audience needs.

Conclusion: Simplify Keyword Generation with AI

Generating effective keywords is a pivotal part of modern content strategies, research workflows, and data analysis tasks. AI and NLP-driven tools like Eskritor have made it simpler than ever to extract, refine, and export high-value keywords in a fraction of the time it would take manually.

By combining multiple techniques—TF-IDF, semantic analysis, topic modeling, and more—you gain a comprehensive understanding of what truly matters in your text. This insight fuels better SEO outcomes, more focused research, and engaging marketing materials. In short, AI-based keyword extraction is no longer optional; it’s a necessity for professionals who value efficiency, precision, and data-driven decision-making.
